Virtual and augmented reality headsets are designed to place wearers directly into other environments. The technology is already popular for its immersive quality, but future holographic displays could look even more like real life. In pursuit of such displays, the Stanford Computational Imaging Lab has combined its expertise in optics and artificial intelligence. The lab's latest advances in this area are described in a paper published today in Science Advances and in work that will be presented at SIGGRAPH Asia 2021 in December.

At its core, this research confronts the fact that current virtual and augmented reality displays show only 2D images to each of the viewer's eyes, rather than the 3D, or holographic, images we see in the real world.

Gordon Wetzstein, associate professor of electrical engineering and leader of the Stanford Computational Imaging Lab, said these displays need to be perceptually realistic. Wetzstein and his collaborators are working to bridge the gap between simulation and reality while creating displays that are more visually appealing and easier on the eyes.

The Science Advances paper describes a technique for reducing the speckling distortion often seen in laser-based holographic displays, while the SIGGRAPH Asia paper proposes a way to more realistically represent the physics that would apply to a 3D scene if it existed in the real world.

Bridging simulation and reality

Over the past decades, the image quality of existing holographic displays has been limited. Wetzstein explained that researchers have struggled to make a holographic display look as good as an LCD.

One problem is that it is difficult to control the shape of light waves at the resolution of a hologram. Another major obstacle to high-quality holographic displays is the gap between what happens in a simulation and what the same scene actually looks like on a real display.

Scientists have previously developed algorithms to address both problems. Wetzstein and his collaborators also created algorithms, but they did so using neural networks, a form of artificial intelligence that attempts to mimic how the human brain learns information. They call this approach neural holography.

Wetzstein said that artificial intelligence has revolutionized nearly every area of engineering. “But people have only just begun to explore AI techniques in this particular area of holographic displays, or computer-generated holography.”

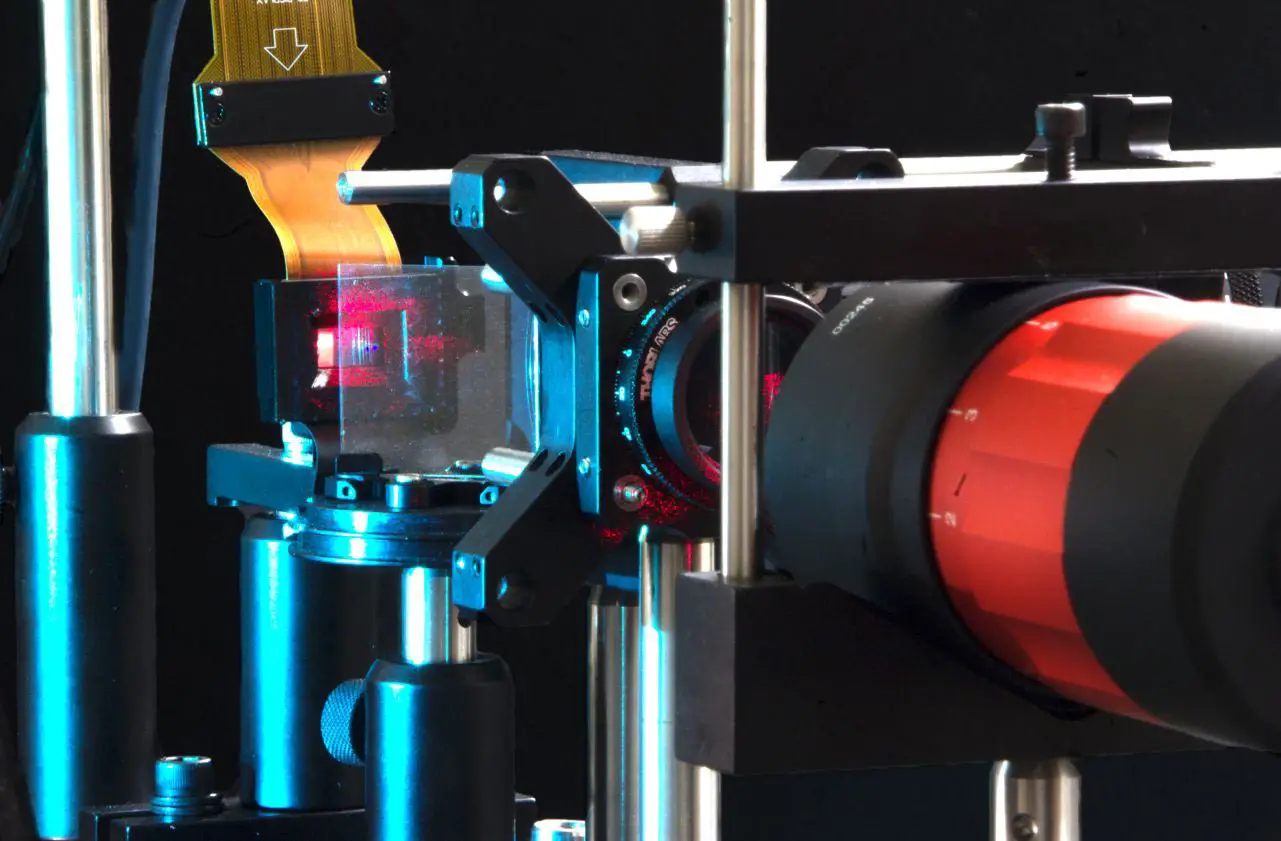

Yifan Peng, a postdoctoral researcher in the Stanford Computational Imaging Lab, draws on his multidisciplinary background in optics and computer science to help design the optical engine for the lab's holographic displays.

Peng is co-lead author of the Science Advances paper and a co-author of the SIGGRAPH Asia paper.

The researchers designed the neural holographic display by training a neural network to mimic the real-world physics of the display, achieving real-time image generation. They paired this with a “camera-in-the-loop” calibration strategy, which provides near-instantaneous feedback that guides adjustments and improvements. Because the algorithm and the calibration technique run in real time, the researchers were able to produce more realistic visuals with better color, contrast, and clarity.
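The camera-in-the-loop idea can be illustrated with a toy model. The Python sketch below is not the researchers' system: it replaces both the neural network and the real display with a hypothetical four-pixel "display" whose simulator predicts an intensity of sin(x)**2 for a control value x, while the stand-in hardware (the hypothetical `capture` function) adds per-pixel gain and offset errors that the simulator does not model. Both optimizations take their gradients from the simulator; the only difference is whether the error is measured on the simulator's output or on the "captured" output.

```python
import math

# Hypothetical toy display: each "pixel" has one control value x (think of it
# as a phase setting). The idealized simulator predicts intensity sin(x)**2.
def simulate(x):
    return [math.sin(v) ** 2 for v in x]

def simulate_grad(x):
    # Derivative of sin(x)**2 with respect to x is sin(2x).
    return [math.sin(2 * v) for v in x]

# Stand-in for the physical display plus camera (hypothetical numbers): it
# follows the same trend as the simulator but has per-pixel gain and offset
# errors that the simulator does not know about.
GAIN = [0.8, 1.1, 0.9, 1.05]
OFFSET = [0.05, -0.02, 0.0, 0.03]

def capture(x):
    return [g * i + o for g, i, o in zip(GAIN, simulate(x), OFFSET)]

def optimize(target, measure, steps=500, lr=0.5):
    """Gradient descent on 0.5 * (measure(x) - target)**2.

    The error comes from `measure` (the simulator or the "camera"), but the
    gradient always comes from the simulator, because the real hardware
    cannot be differentiated directly.
    """
    x = [0.7] * len(target)  # start in (0, pi/2), where sin(2x) > 0
    for _ in range(steps):
        out = measure(x)
        grad = simulate_grad(x)
        x = [v - lr * (o - t) * d for v, o, t, d in zip(x, out, target, grad)]
    return x

def hardware_error(x, target):
    # Worst-case per-pixel error of what the "camera" actually sees.
    return max(abs(c - t) for c, t in zip(capture(x), target))

target = [0.2, 0.5, 0.4, 0.7]
sim_only = optimize(target, simulate)  # trust the simulator blindly
citl = optimize(target, capture)       # camera-in-the-loop
print(f"simulation-only error on hardware:    {hardware_error(sim_only, target):.3f}")
print(f"camera-in-the-loop error on hardware: {hardware_error(citl, target):.3f}")
```

In this toy setup, trusting the simulator leaves a worst-case error of about 0.065 on the stand-in hardware, while the camera-in-the-loop run drives the error to essentially zero. It still converges with the simulator's imperfect gradient because, for these hypothetical errors, the simulated gradient differs from the true one only by a positive scale factor and so still points downhill; a real mismatch between model and display need not be this benign.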

The SIGGRAPH Asia paper describes the first application of the lab's neural holography system to 3D scenes. The system produces high-quality, realistic representations of scenes that contain visual depth.

The Science Advances work uses the same camera-in-the-loop optimization strategy, paired with an algorithm inspired by artificial intelligence, to improve holographic displays that use partially coherent light sources: light-emitting diodes (LEDs) and superluminescent LEDs (SLEDs). These sources are attractive for their size, cost, and energy requirements, and they can avoid the speckled images produced by systems that rely on coherent light sources such as lasers. However, the same properties that make partially coherent systems less prone to speckle also tend to produce blurred images with poor contrast. By developing an algorithm specific to the physics of partially coherent light, the researchers produced the first high-quality, speckle-free holographic 2D and 3D images using LEDs and SLEDs.
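A standard result from speckle statistics helps explain why partially coherent sources speckle less. If a pixel's intensity is the sum of N independent, equally bright speckle patterns that add in intensity rather than in field, the speckle contrast (standard deviation divided by mean intensity) falls from 1 to about 1/sqrt(N). The Python sketch below checks this numerically. Treating an LED or SLED as N independent modes is an idealizing assumption, the function names are hypothetical, and the sketch models neither the blur and contrast loss described above nor the researchers' algorithm.

```python
import math
import random

def speckle_intensity(rng, n_modes):
    """Intensity at one pixel for n_modes mutually incoherent, equally bright
    modes, each producing an independent, fully developed speckle field."""
    total = 0.0
    for _ in range(n_modes):
        # Fully developed speckle: the field is a circular complex Gaussian
        # (the sum of many random phasors), so draw its real and imaginary parts.
        re, im = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        # Incoherent modes do not interfere with each other, so their
        # intensities (not their fields) add.
        total += (re * re + im * im) / n_modes
    return total

def speckle_contrast(n_modes, n_pixels=20000, seed=0):
    """Speckle contrast C = std(I) / mean(I) over many simulated pixels."""
    rng = random.Random(seed)
    samples = [speckle_intensity(rng, n_modes) for _ in range(n_pixels)]
    mean = sum(samples) / n_pixels
    std = math.sqrt(sum((s - mean) ** 2 for s in samples) / n_pixels)
    return std / mean

for n in (1, 4, 16):
    print(f"{n:2d} modes: measured C = {speckle_contrast(n):.3f}, "
          f"theory 1/sqrt(N) = {1 / math.sqrt(n):.3f}")
```

A single coherent mode gives a contrast near 1, which is the grainy look of laser speckle; adding more incoherent modes averages the grain away.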

Transformative potential

Peng and Wetzstein believe that combining emerging artificial intelligence techniques with virtual and augmented reality will become increasingly common across many industries in the coming years.

Wetzstein said he is a strong believer in wearable computing systems, and in AR and VR generally, and that he thinks they will transform people's lives. This might not happen in the next few years, he said, but he believes augmented reality is the “big thing.”

Although virtual reality is most commonly associated with gaming, both virtual and augmented reality have potential uses in many other fields, such as medicine. Medical students can use augmented reality for training, and it can overlay medical data from CT scans or MRIs directly onto patients.

Wetzstein said these types of technology are already used in thousands of surgeries each year. He envisions head-worn displays that are smaller, lighter, and more comfortable becoming a significant part of future surgical planning.

“It's fascinating to see how computation can improve display quality with the same hardware setup,” said Jonghyun Kim, a visiting scholar from Nvidia and a co-author of both papers. Better computation, he noted, can produce a better display, which could have a significant impact on the display industry.